CAD Smart Selection

Describe to select complex geometry


Interaction Design

UX/UI

Timeline

3 Days

Role

Interaction Designer

Introduction

When modeling 3D objects in software like Fusion360, Shapr3D, Blender, and many others, selecting complex geometry can be challenging. For this demo, I designed a fictional web-based CAD app with "Smart Selection", a feature that lets users quickly select complex geometry by describing what they're trying to select to an AI.

Fusion360's Selection Tools

Problems

Selecting complex geometry in CAD software is time-consuming.

Accidental Selections: When selecting specific items in areas dense with geometric features, it's easy to click on the wrong surface, edge, or body.

Spring-Loaded Modality: There is a wide array of short-term selection modes the user can enter. These modes help users select and filter multiple objects. However, they require a lot of muscle memory, and because each mode lasts only while it is held, it can easily be "released" by accident.

Patterns of Geometry: Selecting patterns of geometry is repetitive or requires complex filtering and isolation.

Goal

Make selecting complex geometry as easy as pointing at an object in real life.

The Design

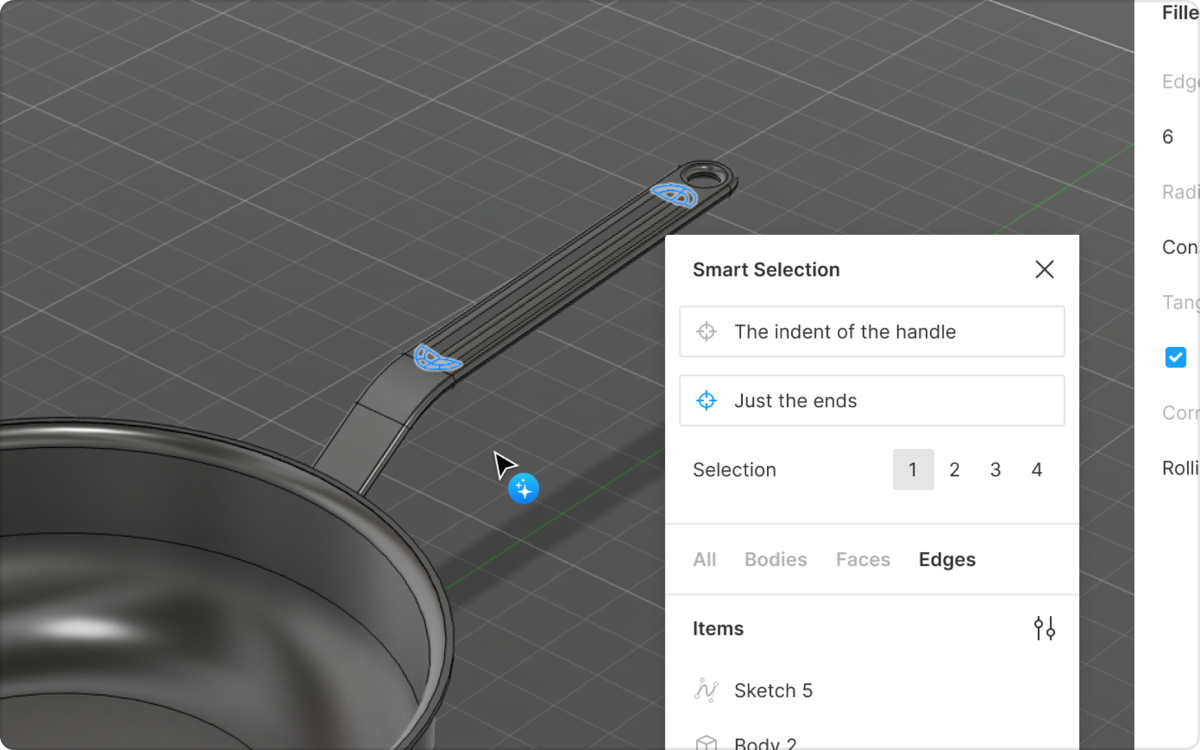

AI-powered describe to select

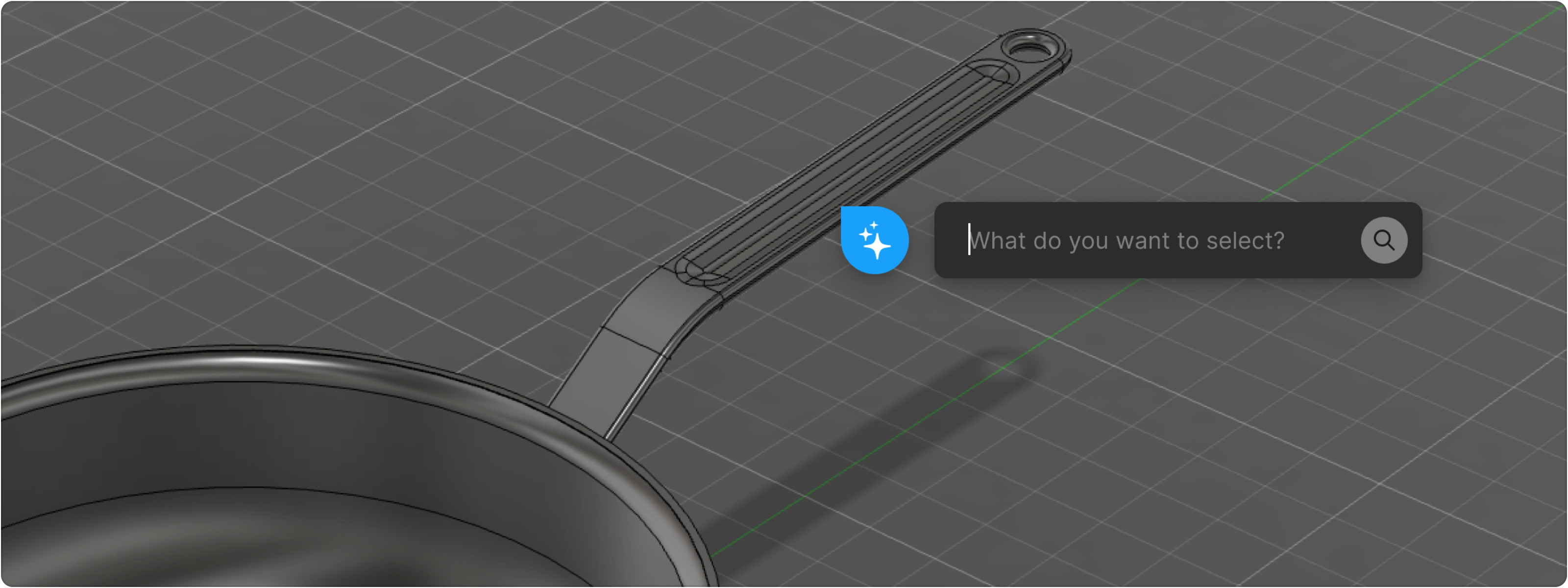

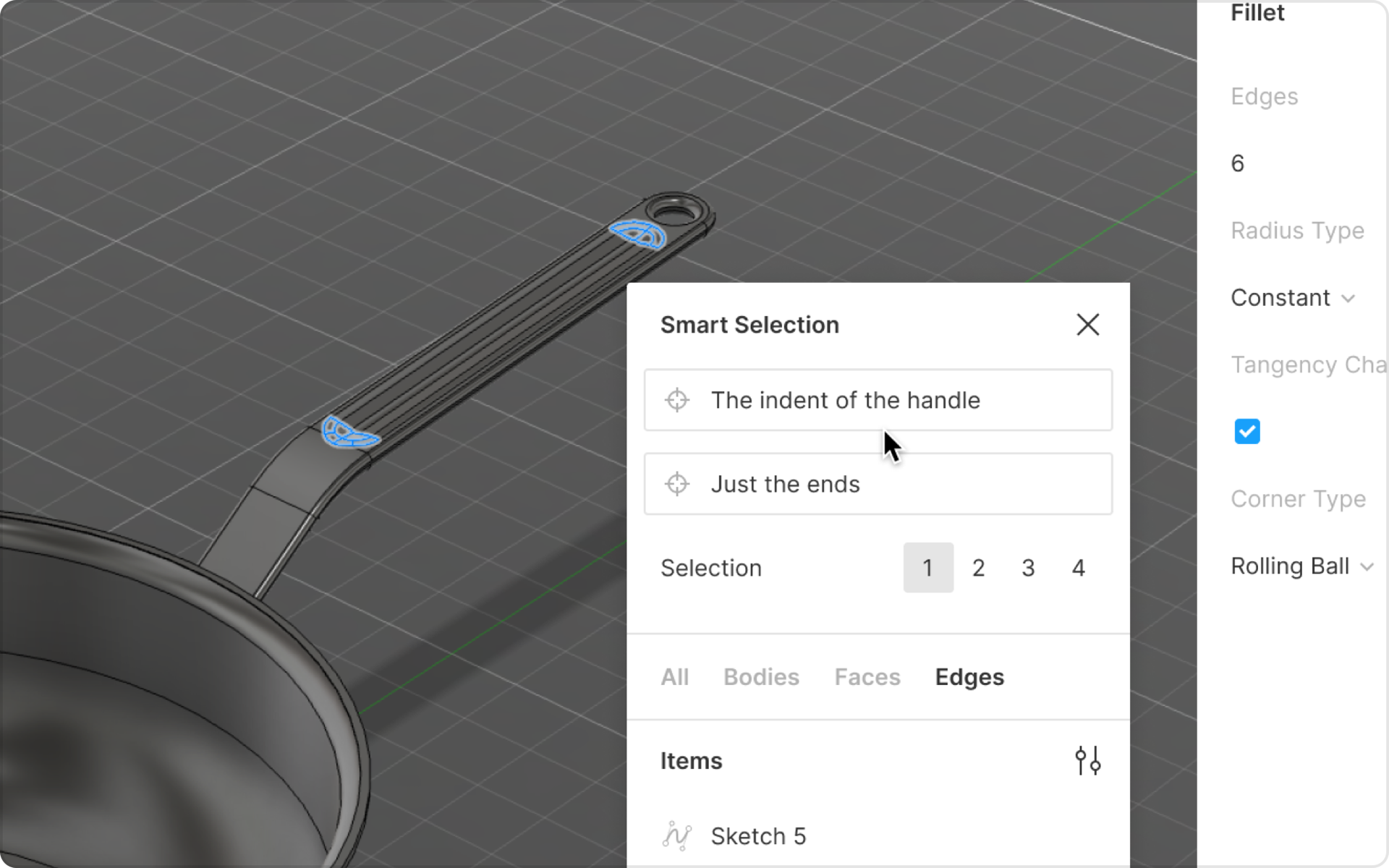

Point & Prompt

Click on an area and tell the AI what you'd like to select.

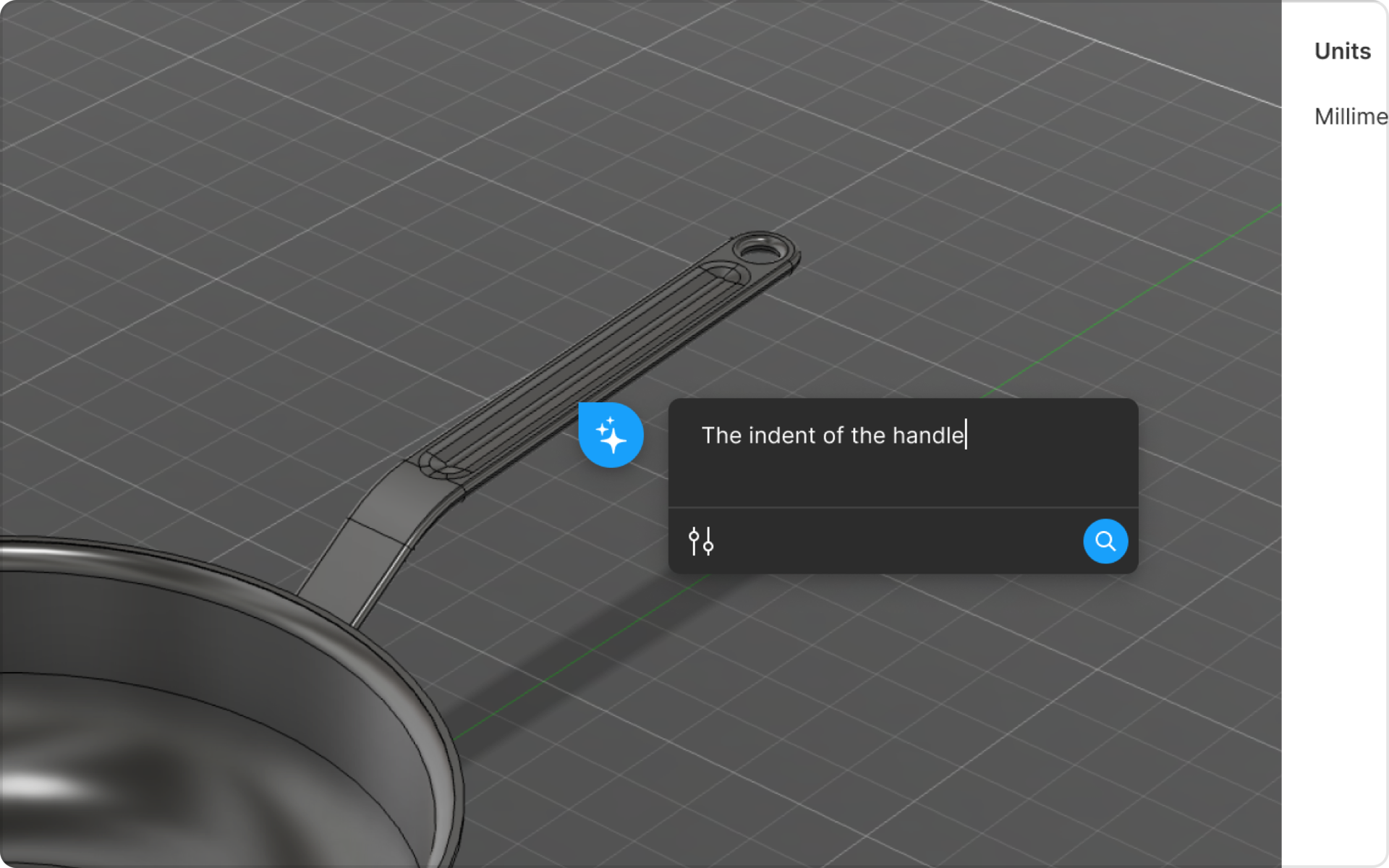

Chain Prompts

Add additional prompts to specify your selection.

Retarget Your Selection

Redefine the area you're trying to select.
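One way to think about how Point & Prompt, Chain Prompts, and Retarget Your Selection fit together is as a small piece of selection state: an anchor point plus a list of prompts. The sketch below is a minimal illustration under stated assumptions, not how the demo is built. It assumes that each chained prompt narrows the previous result and that retargeting moves the anchor while keeping and re-running the prompt chain. `SmartSelection` and `Resolver` are hypothetical names, and the resolver is a stand-in for the AI call.

```python
from dataclasses import dataclass, field
from typing import Callable, FrozenSet, List, Tuple

# Hypothetical types: geometry is identified by string IDs, and a "resolver"
# stands in for the AI call that maps (anchor, prompt, candidates) to a subset.
Point = Tuple[float, float, float]
Resolver = Callable[[Point, str, FrozenSet[str]], FrozenSet[str]]


@dataclass
class SmartSelection:
    resolve: Resolver
    all_geometry: FrozenSet[str]
    anchor: Point = (0.0, 0.0, 0.0)
    prompts: List[str] = field(default_factory=list)

    def point_and_prompt(self, anchor: Point, prompt: str) -> FrozenSet[str]:
        # Point & Prompt: start a fresh selection at the clicked point.
        self.anchor = anchor
        self.prompts = [prompt]
        return self.current()

    def chain(self, prompt: str) -> FrozenSet[str]:
        # Chain Prompts (assumption): each extra prompt refines the last result.
        self.prompts.append(prompt)
        return self.current()

    def retarget(self, anchor: Point) -> FrozenSet[str]:
        # Retarget (assumption): move the anchor, keep the prompts, re-run them.
        self.anchor = anchor
        return self.current()

    def current(self) -> FrozenSet[str]:
        # Apply every prompt in order, each one filtering the previous result.
        selected = self.all_geometry
        for prompt in self.prompts:
            selected = self.resolve(self.anchor, prompt, selected)
        return selected


def toy_resolver(anchor: Point, prompt: str, candidates: FrozenSet[str]) -> FrozenSet[str]:
    # Toy stand-in for the AI: keep geometry on the clicked side whose ID contains the prompt.
    side = "left" if anchor[0] < 0 else "right"
    return frozenset(g for g in candidates if g.startswith(side) and prompt in g)
```

With the toy resolver, clicking at `(-1, 0, 0)` and prompting "hole" selects `left_hole_1` and `left_hole_2`; chaining "_1" narrows that to `left_hole_1`; retargeting to `(1, 0, 0)` re-runs both prompts and yields `right_hole_1`. Keeping the prompts as a list rather than only the latest result is what makes retargeting cheap to express.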

Technology

There are two ways an interaction like this could be built: with a hybrid multi-modal approach, or natively with an LLM.

Hybrid Multi-Modal: The most feasible option would be to combine LLMs with image recognition. When the user describes their selection, the app would create wireframe renderings from different angles, showing only visible edges. The description would go through a text-to-text model that generalizes the names of objects in the user's description. The generalized description and renderings would then be sent to an object-recognition vision model, which would return a selection mask used to select the geometry.
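The last step, turning a 2D selection mask back into 3D geometry, needs some link between pixels and edges or faces. A common technique in 3D picking is to render an ID buffer alongside each view, where every pixel stores the ID of the geometry drawn there. The sketch below assumes that approach: for each geometry ID it pools, across all rendered angles, the visible pixels and the ones the mask covers, then selects IDs whose covered fraction meets a threshold. `mask_to_selection`, the `-1` background convention, and the 0.5 threshold are all hypothetical choices for illustration.

```python
from collections import Counter
from typing import List, Set, Tuple

Grid = List[List[int]]   # per-pixel geometry ID for one view; -1 means background
Mask = List[List[bool]]  # per-pixel selection mask returned by the vision model


def mask_to_selection(views: List[Tuple[Grid, Mask]], threshold: float = 0.5) -> Set[int]:
    """Select geometry whose visible pixels are mostly covered by the mask.

    `views` holds one (id_buffer, mask) pair per rendered angle.
    """
    visible: Counter = Counter()  # pixels where each ID is visible, pooled over views
    hit: Counter = Counter()      # of those pixels, how many the mask covers
    for id_buffer, mask in views:
        for id_row, mask_row in zip(id_buffer, mask):
            for geom_id, selected in zip(id_row, mask_row):
                if geom_id < 0:
                    continue  # background pixel, no geometry here
                visible[geom_id] += 1
                if selected:
                    hit[geom_id] += 1
    return {g for g in visible if hit[g] / visible[g] >= threshold}
```

Pooling across views means an edge hidden in one rendering is simply judged by the angles where it is visible. A per-view vote would be an equally reasonable design; the point is only that the mask needs a per-pixel mapping back to geometry IDs.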

Native LLM: The most performant approach would be to create an LLM trained on labeled CAD files. A dataset of labeled CAD files would need to be built to train the model. To continually train the model, we would need permission to train on user data. This would require that CAD files are stored in the cloud and that users reliably name their geometry.
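The two data requirements above, user permission and reliably named geometry, translate directly into a filter over candidate training data. The sketch below is a minimal illustration, not a real pipeline: `NamedGeometry`, `training_examples`, and the `DEFAULT_NAME` pattern are hypothetical, and the pattern assumes that auto-generated names look like "Body1" or "Sketch12", which will differ between CAD tools.

```python
import re
from dataclasses import dataclass
from typing import List, Set

# Hypothetical pattern for auto-generated names such as "Body1" or "Sketch12";
# real default naming schemes vary by CAD tool.
DEFAULT_NAME = re.compile(r"^(Body|Component|Sketch|Face|Edge)\d+$")


@dataclass
class NamedGeometry:
    file_id: str
    geometry_id: str
    name: str


def training_examples(items: List[NamedGeometry], consented: Set[str]) -> List[NamedGeometry]:
    """Keep geometry from opted-in files that carries a meaningful, user-given name."""
    kept = []
    for g in items:
        name = g.name.strip()
        if g.file_id not in consented:
            continue  # no permission to train on this file
        if not name or DEFAULT_NAME.match(name):
            continue  # unnamed or auto-named geometry is not a useful label
        kept.append(g)
    return kept
```

Filtering out default names matters because a label like "Body1" says nothing about what the geometry is, so training on it would teach the model nothing useful about descriptions.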